One of the most ubiquitous tropes in politics is members of Congress finding ways to blame society’s ills on something new that they don’t understand. Rock music, violence in video games, and profanity on television all had their turn as the societal scapegoat in recent decades. The most recent flavor of moral panic is social media — in particular, lawmakers have begun to cast their suspicious gaze upon the mysterious algorithm as a potential public nuisance in need of their regulation.

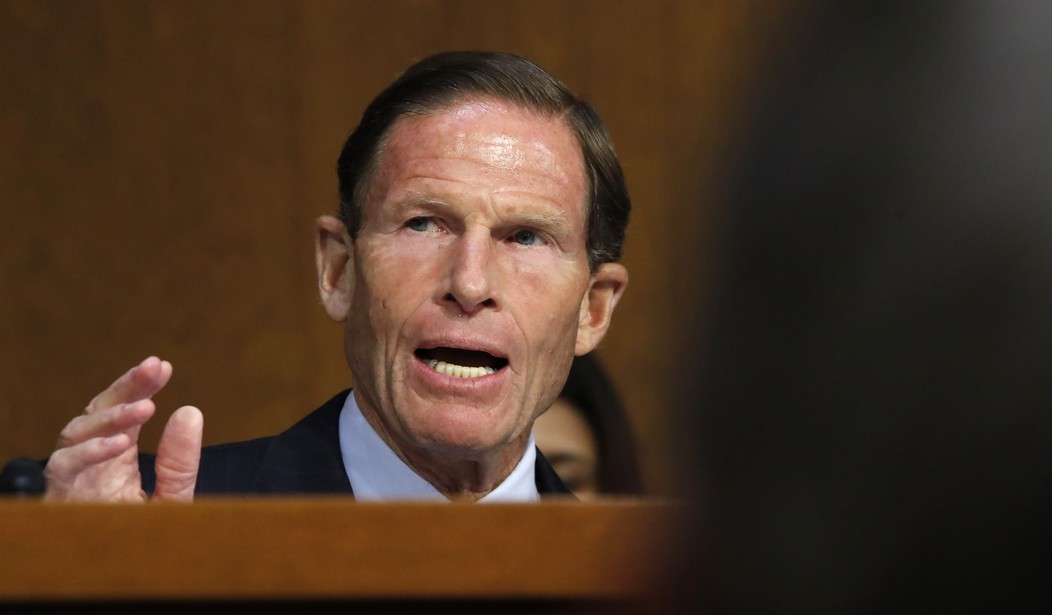

The story goes that the algorithms used to rank the content displayed on users’ social media feeds are amplifying political radicalization, spreading dangerous misinformation, and inflicting psychological harm on users. Senator Richard Blumenthal (D-CT), Chairman of the Senate Subcommittee on Consumer Protection, even questioned “whether there is such a thing as a safe algorithm.”

Though we tend to take it as a given, a platform hosting user-generated content would be an unsatisfying morass if it did not employ some system to tailor what is displayed according to each user’s preferences. That’s why nearly every such platform analyzes users’ searches and interactions and uses that data to inform its algorithms, so that each person doesn’t have to sort through thousands of posts that don’t interest them to get to content they want to engage with.

Politicians have fixated on this as a predatory practice, an industry-wide conspiracy to warp your brain. The truth is that literally every successful product or service offered anywhere succeeds by offering consumers more of what they want. Ultimately, that is all these ranking algorithms are: pieces of code filtering content in a pre-set way according to user inputs.

It’s true that some people may always be vulnerable to being fed more and more of the kind of content that leads them to a bad place, whether it be someone with body image issues or someone with extreme political views who seeks to constantly reinforce their biases. But that is not a problem unique to digital media; social media does not exist in a vacuum.

While studies leaked by Facebook employee-turned-whistleblower Frances Haugen are being spun as evidence of social media giants knowingly boosting engagement to promote harmful interactions for profit, the studies themselves paint a more nuanced picture. While a minority of teens polled reported that Instagram made them feel worse about themselves, the large majority felt the platform was neutral or beneficial to them.

For one of the studies revealed by Haugen’s leaks, Facebook cut off the News Feed content ranking algorithm for 0.05% of its users and observed the reaction. Rather than seeing engagement plummet, Facebook actually made more money from advertising, as users had to scroll more to find interesting content (and thus ran across more ads).

This evidence suggests that supposed solutions proposed by lawmakers, such as the “Filter Bubble Transparency Act,” which would require social media platforms to allow users to disable algorithmic ranking of content, won’t solve anything. Academic studies of other platforms such as YouTube have also reinforced that how users interact with content online is not as easily manipulated as just tweaking an algorithm. Users will still gravitate towards content that interests them — it will just take them longer.

As companies design platforms to allow people to connect and interact with one another from across the globe, all of the foibles that characterize human interactions in the physical world inevitably manifest themselves online as well. Leaning on platforms to solve societal ills is likely only to create new problems, as we have already seen with platforms attempting to be “responsible” by policing “misinformation.”

Social media is still relatively new, and exists in an ecosystem of information sharing that includes broadcast networks, talk radio, and old-fashioned, face-to-face human interaction. Perhaps politicians should be more willing to recognize that no law or regulation is going to suffice to solve complex societal problems which pre-date the internet, no matter how scary or nefarious they make algorithms sound.
