Just when you thought A.I. couldn't get any creepier.

A popular artificial intelligence-powered photograph enhancer is allegedly producing A.I.-generated child pornography with the faces of its users, according to one woman who wanted to see what her future baby would look like, as part of a TikTok trend.

Digital creator Asia Marie Williams is claiming that the viral Remini application turned pictures she uploaded of herself into a half-naked image of a toddler-aged child. However, the little girl's private parts, which Williams said she censored with an emoji when sharing the A.I. creation, were rendered "blank like a Barbie doll," the Remini user claimed in a Facebook post earlier this month.

Others alleged in the comments section that Remini has also produced inappropriate images of A.I.-created children depicting their nipples visibly poking through skin-tight leotards. Days later, another woman on Facebook claimed that Remini similarly generated a toddler wearing a crotchless bodysuit. "Whole little genital area out and showing?!" the Facebook user wrote.

The subscription-based service's automatic A.I. "training process" (fully accessible through Remini Pro's premium plan at $9.99 a week) takes relatively little time, with user "consent" being the legal basis Remini relies on to adapt its algorithm.

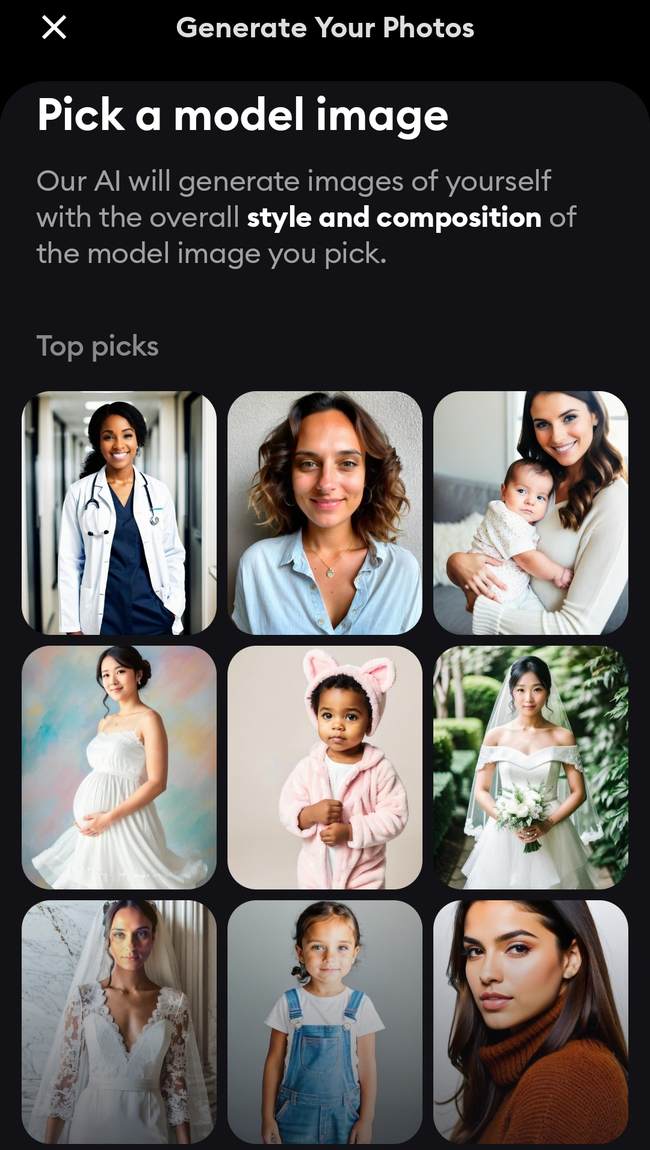

In a matter of minutes, after selecting anywhere from four to 12 selfies to "teach the A.I. what you look like," users can "effortlessly train" Remini's generative A.I. technology to output hyperrealistic, "breathtaking" portraits. But, because the A.I. results are based on components of user-inputted content, Remini advises users to "choose carefully!" from the camera roll.

Screenshot of A.I. "training process" via Remini app

In one TikTok video, a man alleged that upon uploading an array of pictures, Remini produced a preschooler in a jockstrap.

"Y'all, I'm about to hire a lawyer and sue Remini for this sh*t," TikTok creator Roman Novian, a Texas-based Coldwell Banker real estate agent, said in the 39-second clip. "My lawyer will be speaking to them [...] This is concerning," the TikTok-vlogging realtor added, noting that he did not upload any nude photos or photos of himself in underwear, apart from one bare-chested shot.

"Everything was [from] my head up. One shirtless photo," Novian stated. "That's it. This is crazy."

The baby A.I. filter is one of many Remini offers, including templates that allow users to see themselves in wedding dresses and pregnant in maternity wear. Touting millions of users who have downloaded the mobile app from either Apple's App Store or Google Play, Remini has quickly become one of the go-to platforms consumers gravitate toward to produce professional-quality LinkedIn headshots, delete facial blemishes for "picture-perfect" social media posts, and age up (or de-age) subjects.

Ok like I’m so obsessed with this remini app. Tiktok has me obsessed lol pic.twitter.com/AGctzzaNPl

— Sumsum223 (@HadleyReine) July 29, 2023

Rated "E for everyone" with a "4+" age rating, Remini in mid-July dethroned Meta's failed Twitter competitor Threads, securing the top spot as the most popular free-to-download iPhone app, as the Associated Press reported. But general-purpose editing is not what made Remini famous. The app's baby generator is triggering baby fever among today's social media-addicted population.

Yeaaahhhhhh!!!!!!

— Garfield’s Cousin🐯 (@rajah_alamari) July 10, 2023

I’m having baby fever. 🥹🥰😍 #remini pic.twitter.com/hil01jD18x

Remini's developer, Bending Spoons Apps ApS, offers a suite of A.I. tools, like video-editor Splice, whose Terms of Service (TOS) has come under fire as "questionable" and "intrusive" to users. In part, the TOS says that by submitting, storing, sharing, publishing, or otherwise making publicly available any user-generated content, you grant the tech start-up "a worldwide, perpetual, irrevocable, non-exclusive, royalty-free, sub-licensable, and transferrable license to publish, use, reproduce, publicly display, publicly perform, modify, adapt, translate, create derivative works from, reverse engineer, broadcast, distribute, exploit..."

"You will not be entitled to compensation for any use by us, or our agents, licensees or assignees, of such User-Generated Content. You acknowledge and agree that you have no right to review or approve how such User-Generated Content or any Name and Likeness will be used. We will have no obligation to publish or use or retain any User-Generated Content you submit or to return any such content to you," the TOS stipulates. In other words (the TL;DR version), the terms essentially say the company can store your data in perpetuity, use it royalty-free, distribute it, and even exploit it. The regulatory standards for dealing with data and IP rights are still being defined, leading many to inadvertently give up private media to A.I. applications.

(During the onset of the COVID-19 pandemic, Bending Spoons was chosen by the Italian government to design and develop Italy's official coronavirus contact-tracing app Immuni, which tracked patients who tested positive, under the direction of the Special Commissioner for the COVID-19 Emergency, the Ministry of Health, and the Minister for Technological Innovation.)

As for Remini, the app outlines its protocols for storing and deleting facial data and biometrics on its servers. With regard to minors, "We do not knowingly collect, sell, or share personal data about users under the age of 16," the app's creators specify.

Remini has not yet responded to Townhall's request for comment on the child-pornography allegations.

Customers using any of the developer's A.I. features are prohibited from "Uploading, generating, or distributing content that facilitates the exploitation or abuse of children, including all child sexual abuse materials and any portrayal of children that could result in their sexual exploitation," the Bending Spoons privacy page says. However, child-protection advocates point out that contending with an international company, such as the Italy-based Bending Spoons, presents a complex legal framework that could hinder the reporting of TOS violations in which sexual predators use the apps for malicious purposes.

Artificial intelligence's evolution has set off what analysts call a "predatory arms race" on dark-web pedophile forums, where predators can create troves of lifelike images depicting child sexual exploitation within seconds. On one pedophile forum with 3,000 members, about 80% of respondents to an Internet poll indicated they'd used or intended to use A.I. tools to manufacture child sexual abuse material (CSAM), ActiveFence's head of child safety and human exploitation Avi Jager told The Washington Post.

On June 5, the Federal Bureau of Investigation (FBI) issued an alert announcing that the agency has observed an alarming trend of cyber-predators transforming media, often of children, "into sexually-themed images that appear true-to-life in likeness to a victim, then circulate them on social media, public forums, or pornographic websites." Per the FBI's public service announcement:

The FBI is warning the public of malicious actors creating synthetic content (commonly referred to as "deepfakes") by manipulating benign photographs or videos to target victims. Technology advancements are continuously improving the quality, customizability, and accessibility of artificial intelligence (AI)-enabled content creation. The FBI continues to receive reports from victims, including minor children and non-consenting adults, whose photos or videos were altered into explicit content. The photos or videos are then publicly circulated on social media or pornographic websites, for the purpose of harassing victims or sextortion schemes.

The images have also ignited debate on whether A.I. child porn violates federal laws banning illegal material when the victims don't exist, a grey zone that's been hotly contested. Still, child-safety watchdogs argue that A.I.-created CSAM poses a real-world societal harm as fodder used to normalize the sexualization of children or to frame the exploitative practice as commonplace.

In 2002, the U.S. Supreme Court struck down several provisions of a 1996 congressional ban prohibiting virtual child pornography, concluding that the wording was too broad and could even criminalize depictions of "teen sexuality" in pop-culture literature. At the time, Chief Justice William H. Rehnquist wrote in his dissent, anticipating many of the modern-day ethical concerns surrounding A.I. advancement: "Congress has a compelling interest in ensuring the ability to enforce prohibitions of actual child pornography, and we should defer to its findings that rapidly advancing technology soon will make it all but impossible to do so." Legal experts say the SCOTUS ruling from two decades ago merits revisiting in this technological age.
